Autonomous Robotics

Designed by You

Large language & visual models drive robotic creatures

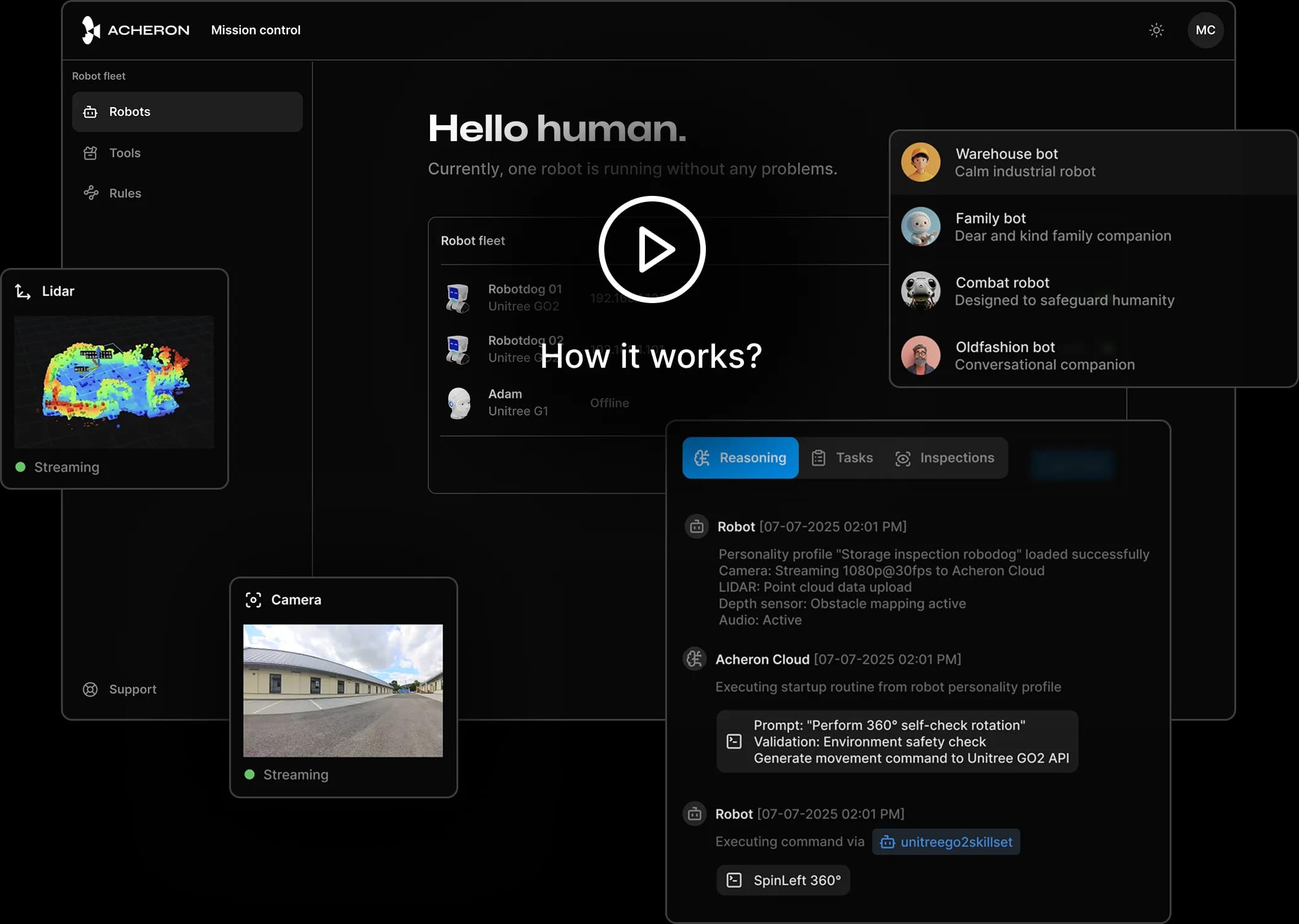

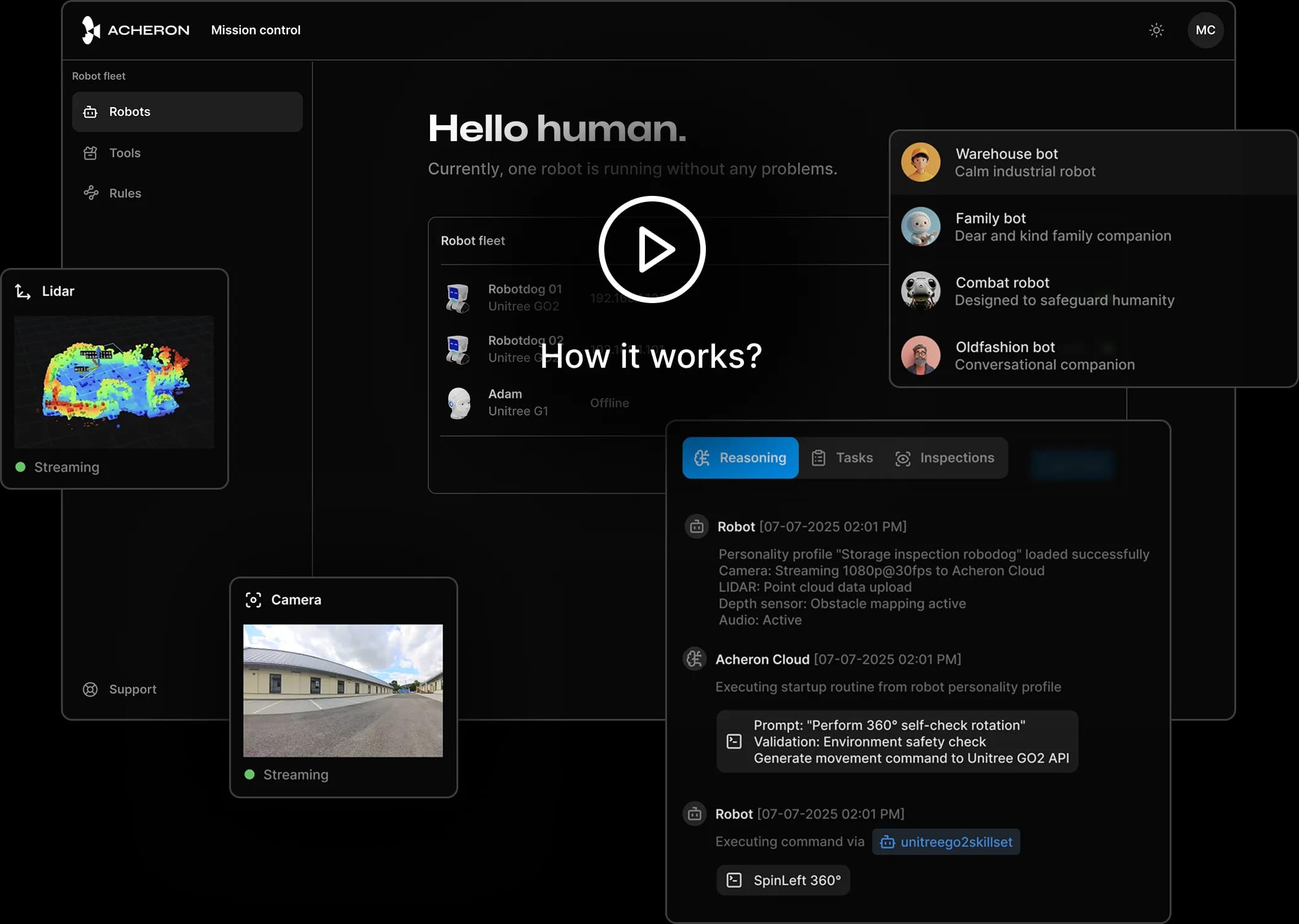

Meet Acheron

Transforming human speech into neurosymbolic robot control through multi-agent LLM systems.

Plan, reason spatially, and execute robotic functions or invoke pre-trained behaviors through LLM agents.

Seamlessly move and transform data between your apps, SDKs, and robots.

Manage your robotic fleet, analyze alerts and trends, plan tasks, and evaluate performance.

Seamlessly connect your apps with living robots, just like n8n.

Turn natural human-like conversation into real robot actions.

Designed for ROS 2 robotic creatures.

Unitree G1 - Spatial RAG and reasoning, navigation, pick and place

OpenARM - Articulated manipulation based on VLM reasoning

Unitree GO2 - Spatial memory and navigation, voice control

Created for your preferred platform